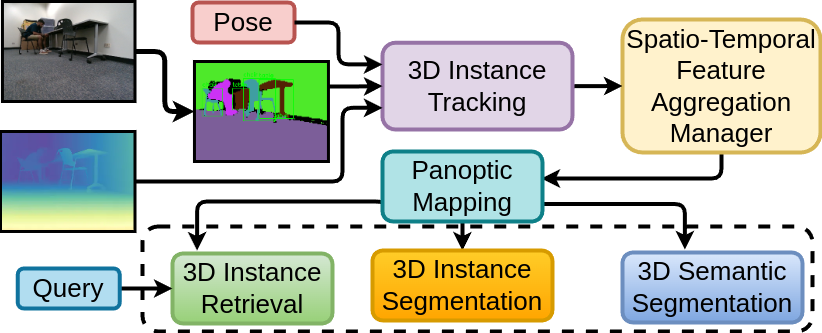

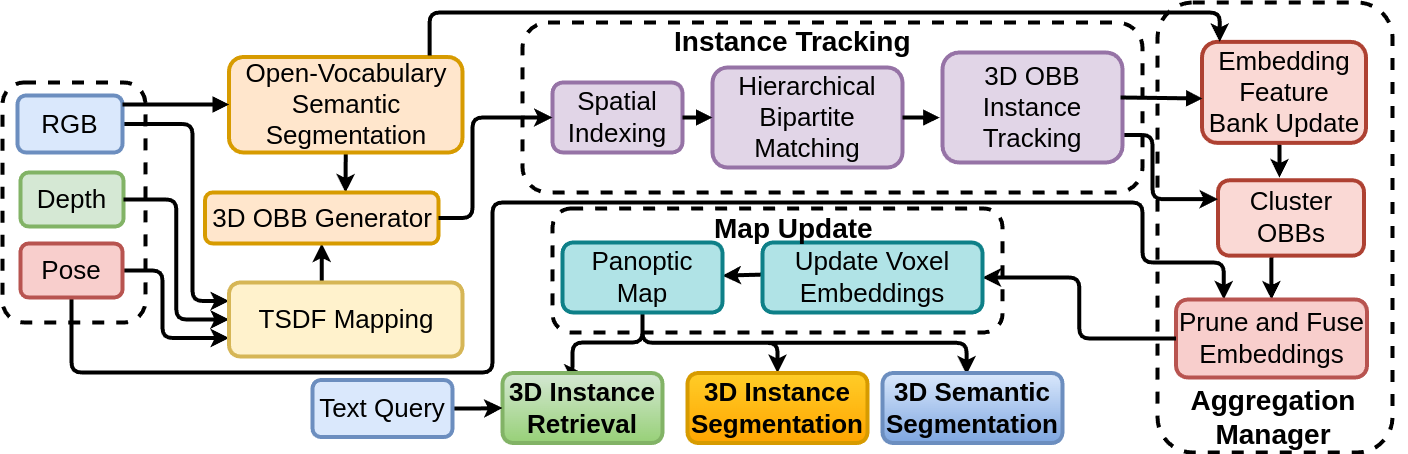

System Overview

Control/Robotics Research Laboratory (CRRL), Department of Electrical and Computer Engineering, NYU Tandon School of Engineering

Mapping and understanding complex 3D environments is fundamental to how autonomous systems perceive and interact with the physical world, requiring both precise geometric reconstruction and rich semantic comprehension. While existing 3D semantic mapping systems excel at reconstructing and identifying predefined object instances, they lack the flexibility to efficiently build open-vocabulary semantic maps during online operation. Although recent vision-language models have enabled open-vocabulary object recognition in 2D images, they have not yet bridged the gap to 3D spatial understanding. The critical challenge lies in developing a unified, training-free system that can simultaneously construct accurate 3D maps while maintaining semantic consistency and supporting natural language interactions in real time. In this paper, we develop a zero-shot framework that seamlessly integrates GPU-accelerated geometric reconstruction with open-vocabulary vision-language models through online instance-level semantic embedding fusion, guided by hierarchical object association with spatial indexing. Our training-free system achieves superior performance through incremental processing and unified geometric-semantic updates, while robustly handling 2D segmentation inconsistencies. The proposed general-purpose 3D scene understanding framework can be used for various tasks, including zero-shot 3D instance retrieval, segmentation, and object detection, to reason about previously unseen objects and interpret natural language queries.

Integrates vision-language models with 3D reconstruction for open-vocabulary recognition of diverse object categories without requiring additional training.

CUDA-accelerated processing at 103 ms/frame (4× faster than comparable methods) with optimized algorithms for efficient mapping without global optimization.

Efficient object association that combines O(log n) spatial-hierarchy queries with Hungarian matching for improved tracking through occlusions and viewpoint changes.

Instance-level semantic embedding fusion across frames, guided by hierarchical object association for consistent identity tracking in dynamic environments.

Maintains up to three semantic embeddings per object with confidence-based pruning to handle ambiguous interpretations in complex, cluttered scenes.

TSDF-based approach combining volumetric reconstruction with semantic embedding updates to address 2D segmentation inconsistencies during 3D mapping.
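The association feature pairs a spatial index with Hungarian matching. The sketch below is a minimal, stdlib-only illustration under assumptions of our own: boxes are axis-aligned (a simplification of the oriented boxes RAZER tracks), the matching cost is 1 - IoU, and a brute-force overlap loop stands in for the R-tree query that would provide the O(log n) candidate lookup. `hungarian`, `box_iou`, `associate`, and the `min_iou` gate are hypothetical names, not RAZER's API.

```python
# HYPOTHETICAL sketch: gated (1 - IoU) costs solved with the Hungarian algorithm.
# Axis-aligned boxes and the brute-force candidate loop are simplifications;
# an R-tree overlap query would replace that loop in a real system.
BIG = 1e6  # finite cost for gated/padded pairs (avoids inf - inf in the solver)


def hungarian(cost):
    """Minimum-cost assignment for an n x m matrix with n <= m.

    Shortest-augmenting-path formulation with dual potentials u, v.
    Returns assignment[i] = column matched to row i.
    """
    n, m = len(cost), len(cost[0])
    u, v = [0.0] * (n + 1), [0.0] * (m + 1)
    p, way = [0] * (m + 1), [0] * (m + 1)  # p[j]: row matched to column j (1-based)
    for i in range(1, n + 1):
        p[0], j0 = i, 0
        minv, used = [float("inf")] * (m + 1), [False] * (m + 1)
        while True:
            used[j0] = True
            i0, delta, j1 = p[j0], float("inf"), 0
            for j in range(1, m + 1):
                if not used[j]:
                    cur = cost[i0 - 1][j - 1] - u[i0] - v[j]
                    if cur < minv[j]:
                        minv[j], way[j] = cur, j0
                    if minv[j] < delta:
                        delta, j1 = minv[j], j
            for j in range(m + 1):
                if used[j]:
                    u[p[j]] += delta
                    v[j] -= delta
                else:
                    minv[j] -= delta
            j0 = j1
            if p[j0] == 0:
                break
        while j0:  # augment along the alternating path
            j1 = way[j0]
            p[j0] = p[j1]
            j0 = j1
    assignment = [-1] * n
    for j in range(1, m + 1):
        if p[j]:
            assignment[p[j] - 1] = j - 1
    return assignment


def box_iou(a, b):
    """IoU of two axis-aligned 3D boxes, each given as (min_xyz, max_xyz)."""
    inter = 1.0
    for k in range(3):
        lo, hi = max(a[0][k], b[0][k]), min(a[1][k], b[1][k])
        if hi <= lo:
            return 0.0
        inter *= hi - lo

    def volume(box):
        return ((box[1][0] - box[0][0]) * (box[1][1] - box[0][1])
                * (box[1][2] - box[0][2]))

    return inter / (volume(a) + volume(b) - inter)


def associate(detections, tracks, min_iou=0.1):
    """Match new detections to tracked objects; returns {detection: track}."""
    n = max(len(detections), len(tracks))
    if n == 0:
        return {}
    cost = [[BIG] * n for _ in range(n)]  # square: padding rows/cols stay BIG
    for i, det in enumerate(detections):
        for j, trk in enumerate(tracks):  # stand-in for an R-tree overlap query
            iou = box_iou(det, trk)
            if iou >= min_iou:
                cost[i][j] = 1.0 - iou
    matches = {}
    for i, j in enumerate(hungarian(cost)):
        if i < len(detections) and j < len(tracks) and cost[i][j] < BIG:
            matches[i] = j
    return matches


tracks = [((0, 0, 0), (1, 1, 1)), ((5, 5, 5), (6, 6, 6))]
detections = [((5.1, 5, 5), (6.1, 6, 6)), ((0.1, 0, 0), (1.1, 1, 1)),
              ((10, 10, 10), (11, 11, 11))]
print(associate(detections, tracks))  # {0: 1, 1: 0}; detection 2 is new
```

Detections left unmatched (detection 2 here) would start new object instances; the `min_iou` gate keeps implausible pairs out of the assignment so the solver cannot force them together.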

Open-vocabulary segmentation masks are back-projected to 3D and clustered with DBSCAN to handle occlusions. Objects are tracked via R-tree spatial indexing and Hungarian bipartite matching, with incremental oriented bounding box (OBB) updates that refine geometric estimates as new observations arrive.
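The back-projection and clustering step can be sketched as follows, assuming a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, a metric depth image, and a boolean mask. Keeping only the largest DBSCAN cluster, to drop background pixels that bleed into a mask at occlusion boundaries, is our assumption about how the clusters are used; the `eps` and `min_pts` values are illustrative, and all function names are hypothetical.

```python
# Hypothetical sketch: pinhole back-projection + DBSCAN (largest-cluster rule assumed).
import math
from collections import Counter, deque


def backproject(depth, mask, fx, fy, cx, cy):
    """Lift masked pixels with valid depth to camera-frame 3D points."""
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if mask[v][u] and z > 0:
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


def dbscan(points, eps, min_pts):
    """Plain O(n^2) DBSCAN; returns a cluster id per point, -1 for noise."""
    labels = [None] * len(points)  # None = not yet visited

    def neighbors(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # may later be relabelled as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = deque(seeds)
        while queue:
            j = queue.popleft()
            if labels[j] == -1:
                labels[j] = cluster  # noise reachable from a core point -> border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            more = neighbors(j)
            if len(more) >= min_pts:  # j is a core point: keep expanding
                queue.extend(more)
    return labels


def largest_cluster(points, eps=0.05, min_pts=3):
    """Keep the dominant cluster, discarding fragments across an occlusion edge."""
    labels = dbscan(points, eps, min_pts)
    counts = Counter(label for label in labels if label >= 0)
    if not counts:
        return []
    best = counts.most_common(1)[0][0]
    return [p for p, label in zip(points, labels) if label == best]


# Toy 4x4 mask on a surface 1 m away, with one pixel hitting a wall at 3 m.
depth = [[1.0] * 4 for _ in range(4)]
depth[3][3] = 3.0
mask = [[True] * 4 for _ in range(4)]
pts = backproject(depth, mask, fx=100.0, fy=100.0, cx=1.5, cy=1.5)
obj = largest_cluster(pts)
print(len(pts), len(obj))  # 16 15
```

The quadratic neighbor search keeps the logic readable; a practical version would use a spatial grid or KD-tree instead.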

Features from each mask are pooled to form semantic embeddings in a continuous representation space. Up to three embeddings per object are maintained with confidence scores, using a similarity-based fusion mechanism to handle ambiguous interpretations and resolve them over time.
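A minimal sketch of the multi-hypothesis embedding store, assuming mean pooling of per-pixel features, cosine similarity with a hypothetical threshold `sim_threshold = 0.8`, and confidence-weighted averaging when an observation matches an existing hypothesis. Only the limit of three embeddings per object comes from the text above; `ObjectEmbeddings`, `mean_pool`, and the fusion rule are illustrative, not RAZER's exact mechanism.

```python
import math

MAX_HYPOTHESES = 3  # "up to three embeddings per object", per the page


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else [float(x) for x in v]


def mean_pool(pixel_features):
    """Average the per-pixel features inside one mask (assumed pooling choice)."""
    dim = len(pixel_features[0])
    return normalize([sum(f[k] for f in pixel_features) / len(pixel_features)
                      for k in range(dim)])


def cosine(a, b):
    return sum(x * y for x, y in zip(a, b))  # both inputs are unit-norm here


class ObjectEmbeddings:
    """Hypothetical per-object store of up to three (embedding, confidence) pairs."""

    def __init__(self, sim_threshold=0.8):
        self.sim_threshold = sim_threshold
        self.hyps = []  # each entry: [unit embedding, accumulated confidence]

    def update(self, emb, conf):
        emb = normalize(emb)
        if self.hyps:
            best = max(self.hyps, key=lambda h: cosine(h[0], emb))
            if cosine(best[0], emb) >= self.sim_threshold:
                # Similar enough: confidence-weighted fusion into that hypothesis.
                w = best[1]
                best[0] = normalize([w * a + conf * b for a, b in zip(best[0], emb)])
                best[1] = w + conf
                return
        # Otherwise keep it as a competing interpretation, then prune.
        self.hyps.append([emb, conf])
        self.hyps.sort(key=lambda h: h[1], reverse=True)
        del self.hyps[MAX_HYPOTHESES:]  # drop the lowest-confidence extras

    def best(self):
        """Most confident interpretation, or None before any observation."""
        return max(self.hyps, key=lambda h: h[1])[0] if self.hyps else None


obj = ObjectEmbeddings()
obj.update(mean_pool([[1, 0, 0], [0.98, 0.2, 0]]), 0.5)  # first view
obj.update([0.99, 0.1, 0], 0.5)                           # fused with the first
obj.update([0, 1, 0], 0.4)                                # competing reading
obj.update([0, 0, 1], 0.3)
obj.update([0.7, 0, -0.7], 0.2)                           # 4th, weakest: pruned
print(len(obj.hyps), [round(h[1], 2) for h in obj.hyps])  # 3 [1.0, 0.4, 0.3]
```

Accumulating confidence on the fused hypothesis is what lets a repeatedly observed interpretation win out over time while rarer readings stay available until they are pruned.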

A TSDF-based representation combines both geometric and semantic information at the voxel level. Each voxel stores signed distance, color, instance labels, and a histogram of observations, updated incrementally with GPU acceleration to maintain geometric-semantic consistency.
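The voxel update can be illustrated with the standard weighted-average TSDF fusion rule, D_new = (W·D + w·d) / (W + w), applied to both distance and color, plus a per-voxel instance-label histogram whose majority vote gives the label. The `Voxel` class, the truncation distance `TRUNC`, the weight cap `W_MAX`, and the rule that only near-surface observations vote are assumptions for illustration; the page does not specify RAZER's voxel layout or GPU kernels.

```python
from collections import Counter
from dataclasses import dataclass, field

TRUNC = 0.04   # truncation distance in metres (assumed value)
W_MAX = 100.0  # weight cap so old observations cannot freeze a voxel (assumed)


@dataclass
class Voxel:
    """Hypothetical voxel record: TSDF value, weight, color, label histogram."""
    tsdf: float = 1.0
    weight: float = 0.0
    color: tuple = (0.0, 0.0, 0.0)
    label_hist: Counter = field(default_factory=Counter)

    def integrate(self, sdf, rgb, instance_id=None, w=1.0):
        """Fuse one observation; sdf > 0 means the voxel is in front of the surface."""
        if sdf < -TRUNC:
            return  # far behind the observed surface: occluded, no update
        d = min(1.0, sdf / TRUNC)  # truncate and normalize to [-1, 1]
        total = self.weight + w
        # Running weighted averages of distance and color.
        self.tsdf = (self.weight * self.tsdf + w * d) / total
        self.color = tuple((self.weight * c + w * n) / total
                           for c, n in zip(self.color, rgb))
        self.weight = min(total, W_MAX)
        if instance_id is not None and abs(sdf) <= TRUNC:
            self.label_hist[instance_id] += 1  # label votes only near the surface

    @property
    def label(self):
        """Majority instance label, or None if the voxel was never labelled."""
        return self.label_hist.most_common(1)[0][0] if self.label_hist else None


v = Voxel()
v.integrate(0.02, (255, 0, 0), instance_id=7)
v.integrate(0.00, (0, 0, 255), instance_id=7)
v.integrate(0.01, (0, 255, 0), instance_id=3)  # a conflicting 2D segment
print(round(v.tsdf, 3), v.color, v.label)      # 0.25 (85.0, 85.0, 85.0) 7
```

The histogram is what lets a voxel absorb an occasional inconsistent 2D segment (instance 3 above) without flipping its label.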

RAZER maintains instance-level bounding boxes and voxel-level labels, providing complete 3D segmentation that updates in real time as new viewpoints become available. This enables applications such as robotic manipulation that require precise object boundaries.

Our framework performs open-vocabulary 3D semantic segmentation with state-of-the-art performance, nearly doubling the effectiveness of previous approaches while preserving fine-grained details across diverse object categories.

The open-vocabulary embeddings enable text-based object search in 3D environments. Natural language queries are processed through the same vision-language model to retrieve semantically similar objects without requiring class-specific training.
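Text retrieval then reduces to ranking stored object embeddings against a query embedding. This sketch assumes the query has already been encoded by the vision-language model's text encoder (not shown) and scores each object by the maximum cosine similarity over its stored embeddings; that scoring rule, the toy 3-D vectors, and the `retrieve` helper are hypothetical.

```python
# Hypothetical retrieval helper; max-over-embeddings scoring is assumed.
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(text_emb, objects, top_k=3):
    """Rank objects by their best-matching stored embedding."""
    scored = [(obj_id, max(cosine(text_emb, e) for e in embs))
              for obj_id, embs in objects.items()]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:top_k]


# Toy 3-D embeddings standing in for real vision-language features.
objects = {
    "obj_0": [[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]],
    "obj_1": [[0.0, 1.0, 0.0]],
    "obj_2": [[0.0, 0.0, 1.0], [0.5, 0.0, 0.5]],
}
query = [1.0, 0.0, 0.0]  # would come from the model's text encoder
print([obj_id for obj_id, _ in retrieve(query, objects, top_k=2)])  # ['obj_0', 'obj_2']
```

Scoring by the best of several stored embeddings means an object whose secondary interpretation matches the query (obj_2 here) can still be retrieved.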

@article{patel2025razer,
  title={RAZER: Robust Accelerated Zero-Shot 3D Open-Vocabulary Panoptic Reconstruction with Spatio-Temporal Aggregation},
  author={Patel, Naman and Krishnamurthy, Prashanth and Khorrami, Farshad},
  journal={arXiv preprint arXiv:2505.15373},
  year={2025}
}